Background

Honestly I do not have the time for a whole spiel, but I’ve come to realize that the variety and volume of OCR errors in the transcribed taxonomic names in the ANSP corpus have negative consequences for the NLP workflow further down the line. In today’s adventure, we’ll find and correct as many taxonomic names as we can (and probably ignore the rest). Pragmatism wins the day!

Workspace setup

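Load the libraries we need. The code below calls functions from readr (write_lines, read_lines, read_csv), tibble (as_tibble) and dplyr (bind_rows), so loading the tidyverse covers everything.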

Clean the text files because R can get caught up on symbols and things.

# create the list of all the txt files in the directory
listtxt <- list.files("/Users/thalassa/Rcode/blog/data/animals", # ANSP text files
                      pattern = "\\.txt$", full.names = TRUE, recursive = FALSE)
# build the output file names for the cleaned text files
outlist <- sub("\\.txt$", "-clean.txt", listtxt)
for (k in seq_along(listtxt)) { # for each text file in the directory specified above
  txt <- readLines(listtxt[[k]]) # load the text file itself
  txtcln <- gsub("[^[:alnum:][:space:]'.]", "", txt) # keep only letters, numbers, spaces, apostrophes and periods
  write_lines(txtcln, outlist[[k]]) # save the cleaned copy (write_lines comes from readr)
}
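To see what that character class actually strips, here is a minimal sketch on a single made-up line. The sample string is invented for illustration and is not from the ANSP corpus. Note the side effect: hyphens and parentheses go too, which matters if a name or its authority uses them.

```r
# Hypothetical OCR-style line, invented for illustration
sample_line <- "Turdus migratorius, Linn. (sub-genus); p. 12"

# Same pattern as the cleaning loop: drop everything except
# letters, numbers, whitespace, apostrophes and periods
cleaned <- gsub("[^[:alnum:][:space:]'.]", "", sample_line)

print(cleaned)
```

The comma, semicolon, parentheses and hyphen all disappear, so "sub-genus" comes out as "subgenus".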

Wow that ran quickly!

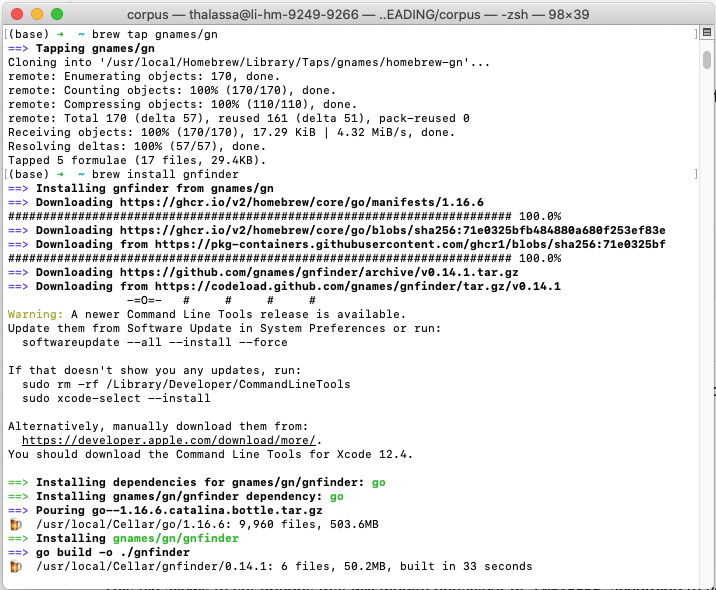

Install and configure GNfinder

Recall that GNfinder [1] must be installed on your machine before you can run it.

To install it, see the instructions here. I am a Mac user, and I used the Homebrew option.

Running GNfinder

Run gnfinder on smaller parts of the Proceedings of the Academy of Natural Sciences of Philadelphia. Reminder: this runs in a bash chunk.

for f in /Users/thalassa/Rcode/blog/data/animals/*-clean.txt
do
  echo "Processing $f file..."
  gnfinder "$f" --verify > "${f%.txt}-gnf.txt"
done

Take the list of GNfinder results and the corresponding list of text files with the ANSP content. Use the GNfinder-corrected taxonomic name to replace the possibly misspelled or slightly wrong verbatim name from the text. Save the cleaned text files.

# create the lists of GNfinder results and the matching cleaned text files
listgnf <- list.files("/Users/thalassa/Rcode/blog/data/gnfinder-parts-clean", # GNfinder output
                      pattern = "gnf\\.txt$", full.names = TRUE, recursive = FALSE)
listtxt <- list.files("/Users/thalassa/Rcode/blog/data/animals", # ANSP text files
                      pattern = "clean\\.txt$", full.names = TRUE, recursive = FALSE)
outlist <- sub("-clean\\.txt$", "-clean-taxa.txt", listtxt) # change the file name for the cleaned text files
for (k in seq_along(listtxt)) { # for each text file in the directory specified above
  dat <- read_csv(listgnf[[k]]) # load the corresponding GNfinder info (read_csv already returns a tibble)
  txt <- readLines(listtxt[[k]]) # load the text file itself
  for (j in seq_len(nrow(dat))) { # for each taxonomic name (row) in the GNfinder results
    if (is.na(dat[[2]][j]) || is.na(dat[[3]][j])) next # skip incomplete rows
    # substitute the corrected "Name" (column 3) for the "Verbatim" text (column 2), matched literally
    txt <- gsub(dat[[2]][j], dat[[3]][j], txt, fixed = TRUE)
  }
  write_lines(txt, outlist[[k]]) # write once, after all substitutions are done
}
rm(listgnf, listtxt, outlist, dat, txt, j, k) # clean up!
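Here is a toy version of the substitution step, as a rough sketch rather than the real thing. The data frame is a hypothetical stand-in for one GNfinder result table: the column names `verbatim` and `name` and all of the values are invented. It shows two details that are easy to get wrong: looping over rows (not columns) and matching the verbatim text literally, so a period in an abbreviated name is not treated as a regex wildcard.

```r
# Hypothetical stand-in for one GNfinder result table (values invented)
gnf <- data.frame(
  verbatim = c("Turdus migratorins", "CORVUS AMERICANUS", "T. migratorius"),
  name     = c("Turdus migratorius", "Corvus americanus", "Turdus migratorius"),
  stringsAsFactors = FALSE
)

# Hypothetical lines of cleaned text
txt <- c("A specimen of Turdus migratorins was taken.",
         "CORVUS AMERICANUS Aud.",
         "Tx migratorius is a typo that should stay untouched.")

# length(gnf) counts columns (2 here); nrow(gnf) counts names (3 here)
for (j in seq_len(nrow(gnf))) {
  # fixed = TRUE matches the verbatim string literally, so the "." in
  # "T. migratorius" does not match the "x" in "Tx migratorius"
  txt <- gsub(gnf$verbatim[j], gnf$name[j], txt, fixed = TRUE)
}

print(txt)
```

Without `fixed = TRUE`, the third pattern would also rewrite "Tx migratorius", which is probably not what we want.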

That’s it - there is now a txt file with taxonomic names for every volume of the ANSP corpus. For the purposes of pulling identified taxonomic names into a generalized spaCy pipeline across any given section of the corpus, we’ll want one YUGE jsonl file with all of the results we just created. We need to merge, clean up gremlins and de-duplicate. Let’s do it!

# list all the files in the folder
listfile <- list.files("/Users/thalassa/Rcode/blog/data/taxa-verbatim-txt",
                       pattern = "\\.txt$", full.names = TRUE, recursive = FALSE)
data <- as_tibble(read_lines(listfile[1])) # start with the first file
for (k in seq_along(listfile)[-1]) { # then append each remaining file
  dat <- as_tibble(read_lines(listfile[k]))
  data <- bind_rows(data, dat)
}
write_csv(data, "/Users/thalassa/Rcode/blog/data/ansp-taxa-clean.txt")
rm(listfile, dat, k) # clean up!
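The merge stacks every line from every file, so repeated names pile up. The de-duplication step isn't shown here, but one minimal way to do it might look like the sketch below. The `merged` vector is a hypothetical stand-in for the stacked lines, with invented values; treat this as one possible approach, not the method actually used for the corpus.

```r
# Hypothetical stand-in for the merged lines (values invented)
merged <- c("Turdus migratorius", "  Turdus migratorius ", "",
            "Corvus americanus", "Turdus migratorius")

taxa <- trimws(merged)       # strip stray leading/trailing whitespace
taxa <- taxa[nzchar(taxa)]   # drop empty lines
taxa <- unique(taxa)         # keep the first occurrence of each name

print(taxa)
```

Trimming before calling `unique()` matters: otherwise the padded copy of a name would count as a different string.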

[1] Mozzherin, Dmitry, Alexander Myltsev, and Harsh Zalavadiya. Gnames/Gnfinder: V0.14.2. Zenodo, 2021. https://doi.org/10.5281/zenodo.5111562.